- Huntbase Blog

The Integration Bottleneck in Cybersecurity: An Old Problem with New Urgency

I still remember a tense incident where our team was juggling five different sources of data from the client’s tooling, trying to manually correlate data between them. The frustration was palpable: these tools should have been speaking to each other, but instead our analysts were stuck copying outputs from one system to feed into another (a giant spreadsheet). Connecting disparate systems is not a new challenge in IT; industries from finance to retail have long wrestled with integrating CRMs, ERPs, and data warehouses. But in cybersecurity, this integration problem has taken on a new urgency. An explosion of specialized security products, an unforgiving threat landscape, and the need for real-time response have all converged to turn the integration bottleneck into a critical pain point for CISOs and security product teams alike. In this post, we’ll dive deep into why integration is so hard in cybersecurity today, where traditional solutions fall short, and how emerging approaches are aiming to bridge the gap. Along the way, I’ll share insights that blend technical detail with strategic perspective (and a bit of hard-earned personal experience) to help both builders and buyers navigate this challenge.

Integration Challenges: Old Problem, New Urgency

Cybersecurity teams are deploying more tools than ever, from cloud security platforms to endpoint agents to threat intel feeds. By one count, there are over 3,700 cybersecurity vendors offering more than 8,000 products [2]. It’s not uncommon for an enterprise SOC to juggle dozens of security tools, each with its own data formats and APIs. The result? A “chaotic” integration landscape where “security APIs and docs are poorly architected” and “data normalization across dozens of tools is painful,” as one startup put it bluntly [5]. In practice, many tools don’t naturally work together — they produce siloed data and have proprietary interfaces, leaving integration heavy-lifting to already frazzled security teams [2].

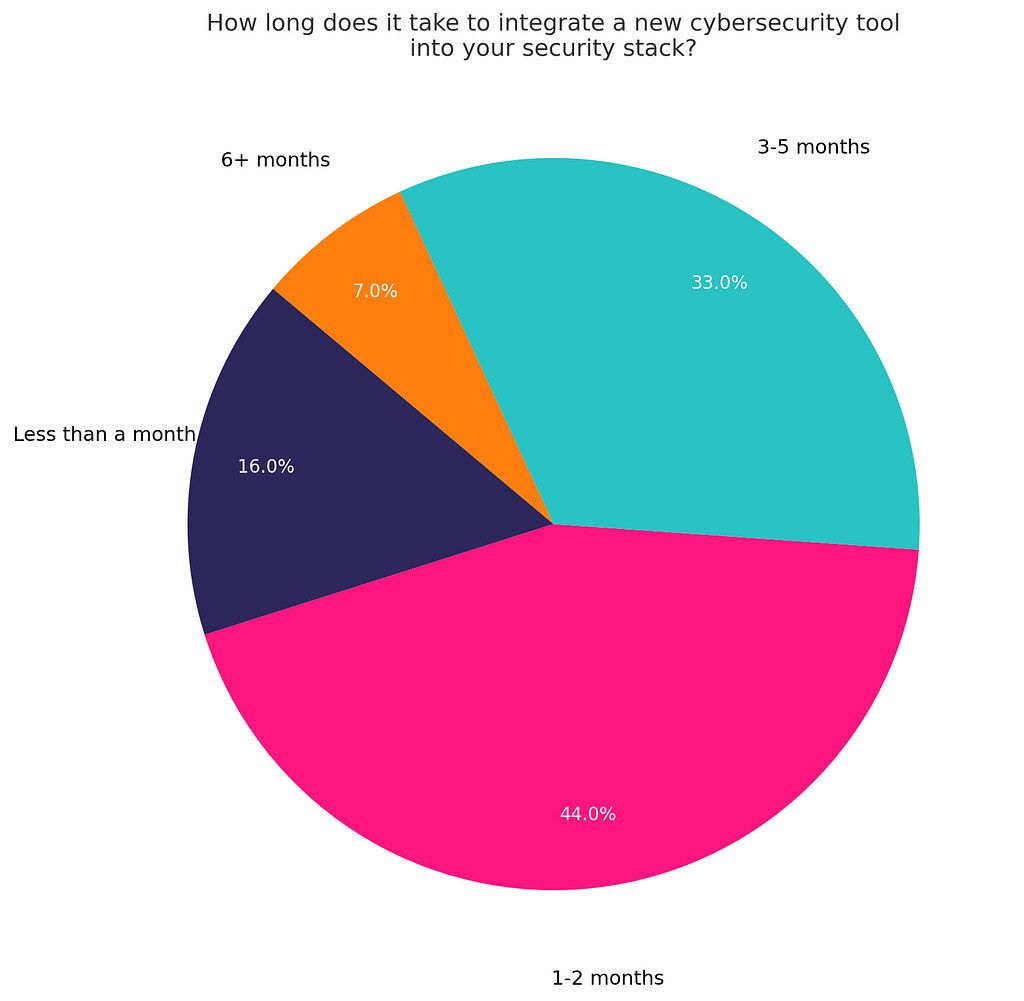

This problem isn’t new in concept. Enterprise IT folks will recall the era of enterprise service buses and middleware meant to connect ERP systems. But in cybersecurity, the stakes and pace are higher. New threats emerge constantly, pushing teams to adopt new tools rapidly. In fact, 51% of security leaders now prioritize integration capabilities when evaluating a new security product, a recognition that even the most advanced tool is of limited value if it can’t share data or trigger actions in the rest of the stack. Yet integrating a new cybersecurity tool is often a slow, painful process. One industry survey found that onboarding a new security product into an existing stack typically takes one to five months [1]. That means by the time a new tool is fully wired into alerting workflows or data pipelines, the threats it was meant to catch may have evolved, or the team may have moved on to the next shiny solution.

The consequences of this integration bottleneck are severe. Valuable tools turn into shelfware because “they don’t integrate well… or they’re too labor-intensive to implement,” as one CISO observed [2]. Security staff get overwhelmed swiveling between consoles, leading to missed alerts and inconsistent responses. In the worst cases, lack of integration creates gaps in defenses — for example, threat data stuck in one system never reaches the SIEM for correlation, or an unintegrated network sensor can’t trigger an endpoint isolation in time. It’s no wonder one security leader noted that piling on more tools without integration “has exacerbated vulnerabilities rather than alleviated them” [2]. The urgency is clear: to improve both efficiency and security outcomes, the industry must untangle this integration knot, and fast.

Scaling Integration Pipelines (Securely) is Hard

Why is integration so challenging to scale in cybersecurity? The core issues will sound familiar to any integration engineer, but they are compounded by the volume and sensitivity of security data. Some common hurdles include:

Lengthy Development: Writing custom code for each new connector or API integration takes time. Each tool added to the ecosystem extends the development timeline, a problem when clients or internal teams demand quick support for the “latest” tool [3].

High Cost & Maintenance: Manually coding and testing integrations isn’t just slow, it’s expensive. Every custom connector requires engineering effort to build and ongoing maintenance to keep up with API changes and version updates [3]. Over time, supporting dozens of integrations can eat up a huge chunk of a product team’s budget.

Error-Prone Processes: More custom code means more room for mistakes. Integration bugs can cause data to mis-map or fail to flow, and in security, such errors can translate to missed threats or false positives [3]. A minor coding error in an integration pipeline might create a security gap.

Compatibility Gaps: Perhaps the biggest headache is that “cybersecurity tools aren’t naturally compatible with each other” [3]. One tool might output JSON with a certain schema, another expects XML; one uses proprietary event codes, another uses a different taxonomy. The integration team ends up stitching together mismatched protocols and data models, essentially building translations for each tool pair.
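To make the "translation per tool" problem concrete, here is a minimal sketch of two parsers that bring a JSON alert and an XML alert into one shared shape. Both payloads and all field names (`src_ip`, `sev`, `source`, and so on) are invented for illustration and do not belong to any real product.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads: neither schema comes from a real security tool.
JSON_ALERT = '{"src_ip": "10.0.0.5", "sev": 3, "msg": "Port scan detected"}'
XML_ALERT = (
    "<event><source>10.0.0.9</source>"
    "<severity>high</severity>"
    "<description>Malware blocked</description></event>"
)

# The JSON tool uses numeric severity codes; the XML tool uses words.
JSON_TOOL_SEVERITY = {1: "low", 2: "medium", 3: "high"}


def from_json_tool(raw: str) -> dict:
    """Translate the JSON tool's fields into the shared event shape."""
    data = json.loads(raw)
    return {
        "source_ip": data["src_ip"],
        "severity": JSON_TOOL_SEVERITY[data["sev"]],
        "summary": data["msg"],
    }


def from_xml_tool(raw: str) -> dict:
    """Translate the XML tool's elements into the same shared shape."""
    root = ET.fromstring(raw)
    return {
        "source_ip": root.findtext("source"),
        "severity": root.findtext("severity"),
        "summary": root.findtext("description"),
    }


events = [from_json_tool(JSON_ALERT), from_xml_tool(XML_ALERT)]
for event in events:
    print(event)
```

Two tools already need two translators, and every additional tool adds another one to write, test, and keep in step with the vendor's changes. That is the maintenance cost the list above describes.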

For security product managers and platform architects, there’s an added twist: these integration pipelines multiply across many customers and use cases. Imagine you’re a security platform vendor providing a cloud service to 100 enterprise customers, and each customer wants to ingest data from 5 other security tools into your platform. That’s 500 individual data pipelines to manage! Ensuring data separation and strong security in this multi-tenant integration scenario is non-trivial. You must guarantee that Customer A’s data flowing in from, say, their on-prem IDS and being pushed to your platform never leaks to Customer B’s pipeline. Each integration needs proper isolation, authentication, and governance controls around it. Misconfiguring an API key or mixing up data streams could be a compliance nightmare.
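As a rough illustration of the arithmetic and the isolation requirement, the sketch below keys every pipeline by tenant and tool together, so a credential cannot be fetched without naming the tenant it belongs to. The class names, tool names, and key format are all hypothetical, and a real system would keep secrets in a vault rather than an in-memory dict.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineKey:
    """A pipeline is identified by the tenant AND the tool, never the tool alone."""
    tenant_id: str
    source_tool: str


class PipelineRegistry:
    def __init__(self) -> None:
        self._credentials: dict[PipelineKey, str] = {}

    def register(self, tenant_id: str, source_tool: str, api_key: str) -> None:
        self._credentials[PipelineKey(tenant_id, source_tool)] = api_key

    def credential_for(self, tenant_id: str, source_tool: str) -> str:
        key = PipelineKey(tenant_id, source_tool)
        if key not in self._credentials:
            # Fail closed: never fall back to another tenant's credential.
            raise PermissionError(f"no pipeline for {tenant_id}/{source_tool}")
        return self._credentials[key]

    def __len__(self) -> int:
        return len(self._credentials)


# Hypothetical tool names; 100 customers x 5 tools each.
TOOLS = ["ids", "edr", "siem", "email_gateway", "threat_intel"]

registry = PipelineRegistry()
for n in range(100):
    for tool in TOOLS:
        registry.register(f"customer-{n}", tool, api_key=f"key-{n}-{tool}")

print(len(registry))  # 500
```

The point of the composite key is that "which tenant?" is never an optional argument. Mixing up streams then has to be an explicit mistake rather than a silent default.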

Integration at scale also demands robust resilience. If a third-party vendor changes their API or an outage occurs, your system needs to handle it gracefully. It’s a fragile dependency problem. When SaaS platforms rely on many external APIs, a single change (like Slack altering an OAuth flow) can break integrations overnight. For security products, an API outage in a dependency could mean blind spots in threat monitoring during that window. Hence, teams have to design fault-tolerant integration pipelines — with retries, circuit breakers, sandbox testing environments, and careful versioning — all of which add complexity. It’s no surprise some security companies invest in extensive CI/CD and automated testing just for their integrations. For instance, Sacumen (a firm we’ll discuss more shortly) even built an AutoNXT system purely to automate testing of security connectors, recognizing how error-prone and critical this aspect is.
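For readers who have not built one, this is roughly what "retries plus a circuit breaker" can look like around a connector call. It is a simplified sketch and not any vendor's implementation: the thresholds, the class names, and the injectable `clock` and `sleep` parameters are my own choices for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is skipped entirely."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("connector disabled; skipping call")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0
        return result


def call_with_retries(breaker, func, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Retry with exponential backoff, but stop at once if the breaker opens."""
    for attempt in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

A connector would wrap each outbound request, for example `call_with_retries(breaker, fetch_alerts)` where `fetch_alerts` is whatever function talks to the third-party API. Retries absorb brief blips, while the breaker stops the pipeline from hammering a vendor that is clearly down and gives monitoring a clear signal that a blind spot has opened.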

Integrating one or two security tools is manageable; integrating dozens in a reliable, scalable, and secure way is a massive engineering challenge. It requires not only connecting the technical plumbing, but also normalizing data formats, enforcing strict access controls, and constantly monitoring the health of each connector. This sets the stage for examining whether our traditional solutions — like SOAR platforms or generic integration services — are up to the task [3].

Why Traditional Solutions (SOAR & iPaaS) Fall Short

When faced with an integration conundrum, many security teams and vendors naturally turn to existing categories of solutions: SOAR platforms in the security world, or Integration-Platform-as-a-Service (iPaaS) tools more common in enterprise IT. These can be powerful, but each comes with limitations when applied to modern security use cases.

Security Orchestration, Automation, and Response (SOAR): SOAR tools (like Splunk Phantom, Palo Alto Cortex XSOAR, etc.) were practically invented to automate workflows between security products. They typically offer playbook editors, built-in connectors to popular tools, and case management features. In theory, a SOAR can glue together your firewall, EDR, SIEM, threat intel feed, and email gateway such that an alert from one triggers actions in all the others. The reality, however, can be less rosy:

Overkill for Simple Needs: These platforms are heavyweight by design — they aim to be the central brain of incident response. But not every integration need is a full incident workflow. If a product manager just wants to add a small automation inside their security SaaS (say, automatically enrich an alert with data from VirusTotal), deploying a full SOAR stack might be overkill. I’ve personally seen small security teams shy away from using their SOAR licenses because the overhead of managing a separate, complex system outweighed the benefits for their day-to-day tasks. This is especially true for smaller enterprises or SMBs, which may lack the dedicated staff to maintain a standalone orchestration engine.

Connector Availability: SOAR vendors support many integrations, but not everything. They tend to cover the common bases (major firewalls, SIEMs, endpoint tools, etc.). However, if your stack includes a niche product or a custom in-house tool, there’s no guarantee the SOAR has a pre-built connector. You might end up writing custom scripts or waiting on the SOAR vendor’s roadmap. In one of my past projects, we discovered that the SOAR could orchestrate across a dozen tools — except the one threat intel platform that our analysts relied on, which had no native connector. We had to build a workaround, negating a big part of the SOAR’s value.

Complexity and Cost: Implementing a SOAR is non-trivial. It often requires significant tuning, playbook building, and training. If not carefully governed, playbooks can become spaghetti code of their own. Additionally, these platforms don’t come cheap. For a security product vendor looking to embed automation, licensing a third-party SOAR to bundle into your product could blow your budget. As one product manager recounted, a full SOAR solution was “often overkill for our needs, particularly when simpler, single-product workflows were called for.” The overhead of a broad SOAR platform didn’t justify itself for targeted integration tasks.

Integration-Platform-as-a-Service (iPaaS): Outside the strictly security realm, iPaaS solutions (think MuleSoft, Boomi, Workato, etc.) are the go-to for integrating cloud apps and databases. They provide drag-and-drop workflows, data transformation capabilities, and large libraries of connectors (Salesforce, SAP, Slack, you name it). Some security teams consider using iPaaS to handle security data integration as well, or product companies think about OEM-ing an iPaaS to offer integrations. These tools shine in general-purpose integration, but they have their own downsides in the security context:

Not Security-Savvy: General iPaaS platforms are built to solve broad integration problems (syncing CRM to ERP, etc.). They are not tailored for security data or workflows. As an example, an iPaaS might easily connect an HR database to Slack, but ask it to parse a Sysmon log or execute a containment action via an EDR API, and it may not have the activity pack or the domain knowledge to do so. One integration specialist noted that the value of these platforms lies in maintained libraries and data mapping, but beyond common security apps like Splunk or CrowdStrike, out-of-the-box security connectors are scarce. This often leaves security teams in the same boat: building custom connectors, just now in a different UI.

Data Volume and Format: Security telemetry can be high-volume, real-time data (think millions of events per day). Some iPaaS tools are not optimized for streaming large volumes of log data or might introduce latency. They’re often geared toward transactional integration (e.g., when a new user is added in system A, update system B), rather than the firehose of alerts and events a SOC deals with.

Security and Compliance: Ironically, using a third-party integration service can raise security questions. If your iPaaS is cloud-hosted, do you feel comfortable piping sensitive breach data or personal identifying information through it? Many iPaaS vendors are very secure, but when dealing with, say, regulated financial breach data, some organizations prefer to keep all processing in-house. There’s also the issue of multi-tenancy — if you’re a security SaaS vendor, embedding an iPaaS means you now rely on their access controls to segregate different customers’ data. Any lapse could mean a data leak between clients.

Lack of Security Context: While iPaaS excels at data transformation (they can normalize dates, merge fields, etc.), they might not inherently understand security meaning. For instance, renaming fields to a common schema is doable, but recognizing that “Severity: High” in one tool should be treated as “Critical” in another, or that an IP from an email header should be checked against threat intel, requires security-specific logic that you’d still have to build. Some Extended Detection & Response (XDR) products used iPaaS-like pipelines for data aggregation and normalization — which is “critical for XDR products handling user data from several products,” as one strategy memo noted. The iPaaS provided the plumbing, but the XDR engineers still had to map and interpret the security semantics of the data.
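The severity example in the last point is worth spelling out, because it is exactly the kind of logic a generic field-renaming step will not give you. Below is a small sketch with two made-up tools: `tool_a` tops out at "High", so its "High" has to map to the canonical "critical", while `tool_b` uses numeric levels. The tool names and scales are assumptions, not real vendor taxonomies.

```python
CANONICAL_LEVELS = ("low", "medium", "high", "critical")

# Hypothetical per-tool severity scales, written by someone who knows
# what each vendor actually means by its labels.
SEVERITY_MAPS = {
    "tool_a": {"Low": "low", "Medium": "medium", "High": "critical"},
    "tool_b": {"1": "low", "2": "medium", "3": "high", "4": "critical"},
}


def normalize_severity(tool: str, raw) -> str:
    """Map a tool-specific severity label onto the canonical scale."""
    mapping = SEVERITY_MAPS.get(tool)
    if mapping is None:
        raise KeyError(f"no severity mapping registered for {tool!r}")
    # Unknown labels are surfaced as "unknown" rather than silently guessed.
    return mapping.get(str(raw).strip(), "unknown")


print(normalize_severity("tool_a", "High"))  # critical
print(normalize_severity("tool_b", 3))       # high
```

The code is trivial. The hard part is the table, which only someone with security context can fill in correctly, and that is the work an iPaaS leaves on your plate.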

None of this is to say SOAR and iPaaS are useless — far from it. They each solve parts of the puzzle. In fact, many organizations use these in tandem: perhaps a SOAR for incident workflows, and an iPaaS for connecting cloud apps or feeding data into a data lake. But the gap is evident: traditional tools either provide deep security automation with heavy overhead, or flexible integration with shallow security awareness. This gap has spurred innovation, with new tools and approaches emerging to specifically target the integration bottleneck in cybersecurity.

Bridging the Gap: Emerging Solutions and Approaches

Fortunately, the industry isn’t standing still. A number of companies and open-source projects have sprung up to tackle cybersecurity integration challenges in more agile ways. Here are a few noteworthy examples and what they bring to the table:

No-Code Security Automation (e.g. Tines): One promising approach is exemplified by platforms like Tines, which positions itself as a modern, lightweight alternative to legacy SOARs. Tines takes an API-centric stance — if a tool offers an API, Tines can connect to it directly without needing a pre-built “connector” in the traditional sense [6]. Essentially, it provides a no-code workflow builder where each step can be an API call, data transformation, or logic action. This means security teams can quickly set up integrations between tools by themselves, using API credentials, rather than waiting on vendor-specific connectors. Tines’ philosophy is to eliminate complex integration middleware and let you script tool-to-tool interactions in a simple, visual way. For security analysts, it’s empowering — they can automate a process (like extracting an indicator from an email and querying multiple threat intel services, then sending results to Slack) all in one place. The platform handles the authentication and sequencing, so analysts don’t have to write Python or maintain servers. By “enabling users to connect directly to any tool with an API, without the need for complex integrations,” Tines and similar tools drastically lower the barrier to integrate new products into workflows. Many security teams find this agility valuable when they need to respond to new threats or tools on the fly. While Tines is a standalone SaaS, it’s also conceivable to embed such no-code automation engines within a product’s UI to let end-users orchestrate their own integrations.

Embeddable Integration Engines (e.g. AppMixer): Speaking of embedding, another emerging trend is vendors integrating the integration capability itself into their products. Instead of expecting customers to configure an external SOAR or iPaaS, some security platforms are offering built-in workflow automation that is highly customizable. One approach is using embeddable integration frameworks like AppMixer. AppMixer is essentially an iPaaS that software vendors can embed into their own software, offering a drag-and-drop workflow editor and a library of connectors — all inside the vendor’s application. In my experience as a product manager, this was a revelation: it meant we could give our users the power of a mini-SOAR within our product, to string together our features with external API calls, without sending them to a third-party tool. AppMixer’s embeddable library allowed us (and others following this model) to provide custom workflow automation natively, tailoring it to our app’s context while the heavy lifting of connector maintenance was handled by the integration engine behind the scenes. This kind of approach bridges the gap between off-the-shelf integration and bespoke development — it’s more flexible than a fixed set of built-in integrations, but more user-friendly than writing code against our API. In practice, a security analyst using our product could, for example, create a workflow that takes an alert from our system, enriches it with a lookup to VirusTotal (via an AppMixer connector), and then creates a ticket in Jira — all through a UI, without coding. For vendors, such solutions can accelerate integration offerings significantly. (We still had to ensure our embedding was secure, e.g., no one tenant could ever affect another’s workflow or data, and we carefully scoped what each workflow can do.) 
The bottom line is that embeddable workflow engines allow security products to deliver integration as a feature of the product, increasing usability for end-users. AppMixer is one notable player here, but we’re also seeing larger automation platforms offering “embedded” editions for OEM use. I sat down with Jiri from AppMixer a few months ago to discuss this problem in more detail on their PM Talks Podcast.

Connector Factories and Integration Services (e.g. Sacumen): Not every company wants to build or integrate an engine — sometimes you just need lots of connectors fast. This demand has given rise to specialist firms like Sacumen, which focus on building connectors for security products. Sacumen essentially operates as a “connector factory” for the cybersecurity industry. Product companies hire them to develop and maintain integrations with various third-party tools. The scale of this need is illustrated by the numbers: Sacumen claims to have built over 900 custom connectors for different security products [3]. Their service (sometimes dubbed “Connector-as-a-Service”) offloads the work of creating and testing integrations. Importantly, Sacumen differentiates itself from generic iPaaS by a “laser-sharp focus on cybersecurity” — their engineers understand the nuances of SIEMs, threat intel feeds, incident management systems, etc., in a way general integration teams might not. For example, they have experience building certified apps for SIEMs like Splunk and QRadar, which can be tricky due to those platforms’ specific requirements. Using a service like this can help vendors rapidly expand their integration list without diverting their core development team. One SIEM vendor, for instance, used Sacumen’s low-code platform (SacuNXT) and saw integration development time drop dramatically — 67% faster time-to-market, 60% lower costs, and 95% fewer errors after adopting the solution. Those are eye-opening numbers that show what a focused integration effort can achieve. The existence of companies like Sacumen also highlights just how big the integration bottleneck is — there’s enough demand that an entire business can thrive purely on solving it for others. For security program managers, engaging such services can be a strategic shortcut to catch up on an integration backlog or offer a new integration faster to win a deal.

Unified Query Layers (e.g. osquery): Another way to ease integration pain is to standardize how we interact with a particular domain of systems. osquery is a great example of this approach for endpoint security. Developed by Facebook (Meta) and now widely used, osquery turns your operating system into a virtual SQL database — you can query system data (running processes, logged-in users, registry keys, etc.) using SQL syntax, across Windows, Linux, macOS in a unified way [4]. Why is this significant for integration? Because traditionally, gathering data from endpoints meant dealing with different APIs or agents for each OS or vendor. Osquery provides a normalized communication layer for endpoints: an open, universal interface. If you integrate osquery into your security operations, you can collect and correlate endpoint data without writing separate integrations for each platform. For example, a security analytics platform might use osquery on all endpoints to fetch software inventory or running processes. Rather than parsing Windows WMI, then writing a different parser for Mac launchctl, etc., the platform just issues standard osquery SQL and gets back results in a consistent format. This dramatically reduces the integration complexity on the endpoint side — one integration (to osquery) effectively covers a wide range of systems. It’s akin to having a common language that all endpoints speak. Many EDR and XDR products incorporate osquery under the hood for this reason. It’s worth noting that osquery is open-source and extensible; security teams can create custom tables and queries to extend its functionality. The success of osquery demonstrates the power of normalization in integration: by abstracting the differences of underlying systems behind a unified interface, you make life much easier for those building higher-level security analytics and response workflows.
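Here is a hedged sketch of what "just issue standard osquery SQL" can look like from a collection script. It assumes the `osqueryi` shell is installed and on the PATH, and it queries the built-in `processes` table; the helper names `run_osquery` and `parse_rows` are mine, not part of osquery.

```python
import json
import subprocess

# The same SQL works on Windows, Linux, and macOS hosts running osquery.
PROCESS_QUERY = "SELECT pid, name, path FROM processes ORDER BY pid LIMIT 5;"


def parse_rows(raw_json: str) -> list[dict]:
    """osquery's JSON output encodes every column as a string; make pid an int."""
    rows = json.loads(raw_json)
    return [{**row, "pid": int(row["pid"])} for row in rows]


def run_osquery(sql: str, binary: str = "osqueryi") -> list[dict]:
    """Run one query through the osqueryi shell and return parsed rows."""
    completed = subprocess.run(
        [binary, "--json", sql],
        capture_output=True,
        text=True,
        check=True,  # raise if osqueryi exits with an error
    )
    return parse_rows(completed.stdout)


if __name__ == "__main__":
    for proc in run_osquery(PROCESS_QUERY):
        print(proc["pid"], proc["name"], proc["path"])
```

In production you would more likely run the osquery daemon with scheduled queries and ship results to a log pipeline, but the integration surface is the same: one query language and one result format, whatever the operating system underneath.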

Unified API Hubs (e.g. Leen, leen.dev): Expanding the idea of a unified layer, several startups are now offering Unified APIs for security products. One such example is Leen (leen.dev), which provides a single API that aggregates and normalizes data from many different security tools. The idea is simple: instead of every security product vendor or integrator writing 50 different API clients to talk to 50 tools, you integrate once with the unified API, and it handles connecting to those various tools on the backend. Leen focuses on “unlocking security data from hundreds of tools with a single API”. Under the hood, it comes with pre-built connectors and, crucially, unified data models for categories of security tools (SIEM, cloud logs, identity providers, etc.). That means it not only funnels the data, but also presents it in a consistent schema regardless of source. As their site explains, “Unified APIs combine multiple APIs into a single, standardized interface… they abstract away complexity, standardize interactions, normalize data formats, handle security centrally, and offer scalability.” Essentially, the unified API service worries about differences in vendor A vs vendor B, and gives you a common set of objects or events to work with. For developers of security platforms, this can be a huge win: it streamlines integrations by letting them code to one interface. For example, if your product needs to retrieve vulnerability scan results from various scanners, a unified API might let you fetch “Vulnerability” objects in one format, while it deals with whether the data came from Qualys, Nessus, or another source. Leen touts that this approach lets teams launch new integrations in “hours instead of weeks,” since so much of the groundwork is already handled. Of course, using a unified API means relying on that third-party service to keep connectors up to date and handle data securely, but for many the trade-off is worth it.
It’s like adding an integration “layer” or broker into your architecture. Other players in this space are taking similar approaches, sometimes focused on specific domains (for instance, some provide unified APIs for cloud security posture across AWS/GCP/Azure, etc.). The key theme is normalization and consolidation of effort — instead of everyone reinventing the wheel, these services act as a hub. Leen itself highlights the pain point well: “There are a few thousand security products and it’s common for security teams to have dozens… Building integrations with dozens to hundreds of products, normalizing data across them, and taking actions in these systems requires significant engineering.” By offering a unified communication layer, they aim to drastically reduce that engineering overhead for consumers of security data.
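The “Vulnerability” object idea can be sketched in a few lines. This is not Leen's API or data model. It is a toy adapter layer with two invented scanner payloads (`scanner_a` and `scanner_b`, standing in for sources such as Qualys or Nessus), and every field name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vulnerability:
    """The one shape that consumers of the unified layer code against."""
    cve_id: str
    host: str
    cvss: float
    source: str


def from_scanner_a(record: dict) -> Vulnerability:
    return Vulnerability(
        cve_id=record["cve"],
        host=record["asset"]["hostname"],
        cvss=float(record["score"]),
        source="scanner_a",
    )


def from_scanner_b(record: dict) -> Vulnerability:
    return Vulnerability(
        cve_id=record["vuln_id"].upper(),
        host=record["target"],
        cvss=float(record["cvss_base"]),
        source="scanner_b",
    )


# One adapter per backend; callers never see the vendor-specific shapes.
ADAPTERS = {"scanner_a": from_scanner_a, "scanner_b": from_scanner_b}


def list_vulnerabilities(raw_by_source: dict[str, list[dict]]) -> list[Vulnerability]:
    """Normalize records from every source and return them, highest CVSS first."""
    unified = []
    for source, records in raw_by_source.items():
        unified.extend(ADAPTERS[source](r) for r in records)
    return sorted(unified, key=lambda v: v.cvss, reverse=True)


# Sample data for illustration only.
raw = {
    "scanner_a": [{"cve": "CVE-2014-0160", "asset": {"hostname": "web-01"}, "score": "7.5"}],
    "scanner_b": [{"vuln_id": "cve-2021-44228", "target": "app-02", "cvss_base": 10.0}],
}
for vuln in list_vulnerabilities(raw):
    print(vuln)
```

A hosted unified API moves the `ADAPTERS` table, and the job of keeping it current, onto someone else's side of the network boundary. Your code keeps only the `Vulnerability` type and a single client.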

Each of these solutions — and others emerging — addresses the integration bottleneck from a different angle. Whether it’s enabling simpler DIY integrations (Tines), embedding customizable workflows (AppMixer), outsourcing connector development (Sacumen), using open standards (osquery), or abstracting integrations behind a single API (Leen), the goal is the same: make it faster and easier to connect security tools while preserving (or enhancing) security. And importantly, many of these approaches can coexist. A security operations team might use osquery for endpoints, a SOAR or Tines for incident workflow, and a unified API service to feed data into their analytics pipeline, all at once. A product vendor might both embed a workflow engine for customers and use a connector service to expand their supported integrations list. There’s no one-size-fits-all answer, but there is a clear trend towards more flexible, security-focused integration strategies.

The Case for a Common Language: Normalization as Key

One lesson that cuts across these emerging solutions is the importance of a normalized communication layer. In plain terms, the more we can get our myriad security systems to speak a common language or interface, the easier integration becomes. We saw that with osquery giving endpoints a common query language, and with unified APIs standardizing data models. This concept isn’t new — consider how network protocols eventually converged on TCP/IP or how the database world standardized on SQL — but in cybersecurity, true standardization has been elusive so far due to the field’s breadth and rapid evolution.

However, efforts are afoot. The Open Cybersecurity Alliance, for instance, has been promoting open standards like STIX/TAXII for threat intel sharing and OpenDXL for messaging between security tools. Most recently, a discussion has been taking place as part of its OXA project to support open communication between security solutions. The ideal future state would be one where adding a new security tool is plug-and-play because it speaks the same data schemas and API patterns as the others. We’re not there yet, but the products discussed above indicate a recognition that we need a common layer of communication.

For builders of security technology, a takeaway is that embracing normalization can be a force multiplier. If you design your product to ingest or output data in common formats (or support standards alongside your proprietary schema), you make it inherently easier to integrate. If you adopt something like a unified API or open standard, you gain interoperability that can make your tool more attractive. It’s analogous to speaking a lingua franca in a foreign country — you’ll get much farther if you can communicate in a common language rather than expecting everyone to learn yours.

Normalized communication layers also encourage a healthier security ecosystem. When tools interoperate smoothly, security teams can automate end-to-end workflows that unleash the full value of their investments. Alerts can seamlessly trigger enforcement actions; analytics systems can crunch data from all sources in a unified view. The “platform fatigue” of jumping between consoles starts to fade. Instead, teams can build cohesive security operations centers where data flows to the right places and responses propagate quickly. This is the vision behind concepts like XDR (eXtended Detection and Response) — unifying formerly siloed tools into an integrated whole. Achieving that vision widely will require either standardization or widespread use of translators (like unified APIs) until standardization possibly takes hold.

Pushing for normalized layers, whether through industry standards, open-source projects, or middlewares, is a strategic imperative if we want to truly streamline integration efforts. It’s heartening to see initiatives and products moving in that direction. Every step towards a common language for cybersecurity products reduces the friction that currently slows down defenders.

AI Agent Communication: The Emergence of New Protocols

One of the most transformative shifts in recent months has been the introduction of AI agent communication protocols, most notably the Model Context Protocol (MCP). As AI systems increasingly take on automation, analysis, and decision-making roles within cybersecurity platforms, ensuring these AI agents can coordinate and communicate efficiently has become critical.

MCP is an open protocol, introduced by Anthropic in late 2024, that standardizes how AI applications connect to external tools and data sources. A server exposes tools, resources, and prompts; a client inside the AI application discovers and invokes them through structured JSON-RPC messages. Strictly speaking, MCP connects a model to its tools rather than one agent to another, but because any agent that speaks it can reach any compliant tool (including one backed by another agent), it is a promising building block for agent orchestration. This mirrors the way security integration platforms seek to create a common data layer: MCP tries to establish a common language for how AI systems reach the tools and context they reason over.
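To give a feel for what travels over the wire, MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with the `tools/call` method. The sketch below only builds such a request. The tool name `lookup_ip_reputation` and its arguments are hypothetical, and a real client would use an MCP SDK and perform the initialization handshake first.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry a unique id per call


def mcp_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool exposed by a threat intel MCP server.
request = mcp_tool_call("lookup_ip_reputation", {"ip": "203.0.113.7"})
wire_message = json.dumps(request)
print(wire_message)
```

What matters for integration is that the envelope is the same for every tool. Only the `name` and `arguments` change, which is the same normalization idea as a unified API, applied to the calls an AI system makes.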

Other emerging frameworks in this space include:

OpenAI’s function calling and Assistants API: enabling agents to dynamically call external tools or invoke APIs as part of reasoning loops.

LangChain’s AgentExecutor and Tool abstractions: managing prompt memory and tool access for chains of AI tasks.

AutoGPT and CrewAI-style multi-agent planners: delegating tasks across AI workers with defined roles and communication steps.

In the context of cybersecurity integration, these protocols may one day underpin AI-driven response systems where autonomous agents correlate events, perform triage, request additional data from threat feeds, and coordinate remediation actions — all without human intervention. The integration challenge here evolves into agent interop and trust: ensuring these intelligent components can operate reliably and securely across products.

In this future, your firewall, EDR, and cloud security tools might not just be integrated via API calls — they may each host specialized AI agents that reason locally and coordinate globally through protocols like MCP. The move toward protocolized AI integration is still nascent, but it’s a powerful concept, especially as AI becomes more embedded in every security layer.

Making Integration a Strategic Priority

The integration bottleneck in cybersecurity is not just a technical nuisance — it’s a strategic issue that impacts the effectiveness of security programs and the success of security product companies. As we’ve explored, failing to integrate tools properly leads to wasted investments, fragmented defenses, and burnout for the analysts stuck in between. Conversely, solving the integration challenge unlocks immense value: it allows organizations to leverage the full power of their security arsenal in concert, rather than as isolated point solutions.

For product managers and platform builders, the clear mandate is to treat integration capabilities as first-class features, not afterthoughts. This means planning for integrations early in the product roadmap, providing robust APIs for your own product, and possibly investing in one or more of the approaches discussed — whether it’s partnering with a connector service like Sacumen to rapidly expand your integration offerings, embedding a workflow/integration engine to let users customize workflows in-app, or integrating with unified API platforms to simplify data exchange. It also means designing with multi-tenancy and security in mind from the get-go: ensuring that any integration or automation you enable is done in a secure, isolated manner for each customer. The payoff is happier customers (because your product fits into their ecosystem with less effort) and a stronger competitive stance (because integration can be a selling point rather than a roadblock). As one example, after a major overhaul of its integration strategy, EclecticIQ (a threat intelligence platform) dramatically improved adoption — a real-world case where rethinking integration became a market differentiator. Integration work might not be as flashy as developing a new detection algorithm, but it can absolutely make or break a product’s success in the market.

For buyers and security leaders (CISOs, analysts), it’s important to demand integration-friendly solutions. When evaluating tools, dig into how well they play with others. Ask vendors about their APIs, their integration partners, and their support for standards. If a tool doesn’t integrate with your existing critical systems, factor in the engineering effort or additional products needed to bridge that gap — and weigh if it’s worth it. Also, consider consolidation not just in terms of reducing tool count for cost, but in terms of integration load: sometimes a slightly less feature-rich tool that integrates easily may provide more real-world security value than a siloed best-of-breed tool. Encourage your teams to document and prioritize integration requirements as part of your security architecture. And if you have the resources, explore some of the new solutions yourself — maybe a no-code tool can automate that tedious report compilation, or an open-source project can unify data from several internal tools. Security teams can be creative in gluing things together (some of the best integrations I’ve seen were basically a few Python scripts holding critical workflows together!), but giving them the right platforms and support will make those efforts more sustainable and secure.

Solving the integration challenge in cybersecurity comes down to collaboration — between tools, between teams, and across the industry. As someone who has wrestled with this problem firsthand, I’m heartened by the progress we’re seeing. Integration is no longer an afterthought; it’s becoming a key criterion and area of innovation. By approaching it with the same seriousness as we do detection algorithms or zero-trust architectures, we can turn integration from a bottleneck into a competitive advantage. The complex web of cybersecurity products can indeed be made to work in harmony — and when they do, security teams can finally focus on fighting attackers instead of fighting tool APIs. That is the real promise of conquering the integration puzzle, and it’s one within our reach.

Sources:

[1] Senseon SOC Survey 2024: Integration timelines and buying considerations

[2] SiliconANGLE: Cybersecurity tool sprawl and integration woes

[3] Cyber Defense Magazine: Sacumen on addressing integration hurdles

[4] CrowdStrike: Osquery’s unified interface for endpoints

[5] Leen (Unified API): Normalization and unified data models benefits

[6] Tines: API-centric automation eliminating complex integrations (via Tines blog)