- Huntbase Blog

Beyond the Bots: Reclaiming the Art of Investigation in the AI-Powered SOC

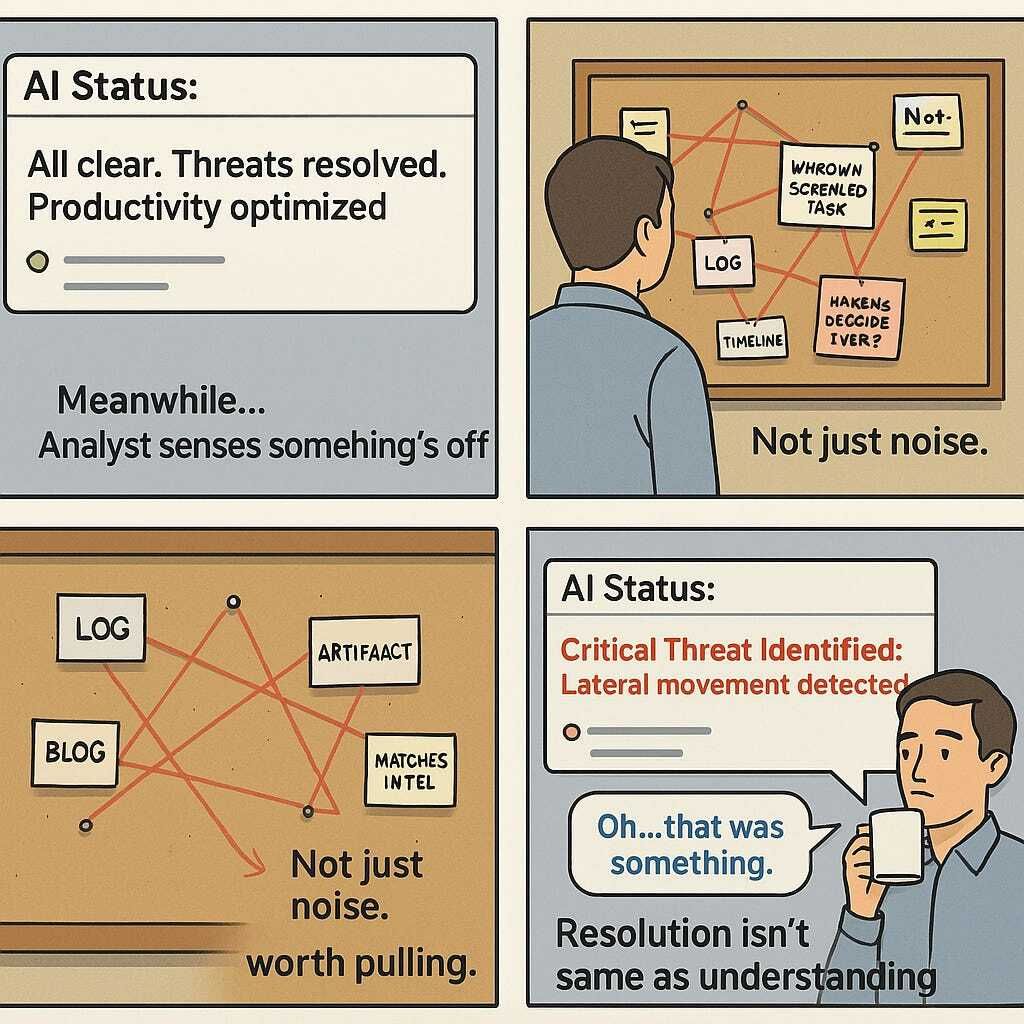

The Illusion of Resolution

If you’ve spent time in cybersecurity, you’ve heard the promises before: “reduce alert fatigue,” “automate detection of threats,” “resolve incidents instantly.” AI-powered SOC tools are riding high on these claims, positioning themselves as the ultimate fix for overloaded analysts. And who can blame security teams for wanting relief? After all, anyone who has stared down thousands of noisy alerts understands the appeal.

But there’s a catch — one that marketing hype conveniently glosses over. Automating away the routine doesn’t solve cybersecurity’s toughest problems; it merely shifts them. When AI clears the easy alerts, what’s left behind are complex incidents hidden in ambiguity, subtle adversaries quietly persisting, and threats without clear signatures.

Earlier in my career, as an incident responder at Mandiant, I saw countless security events that looked benign at first glance. Automation would have closed them quickly and marked them resolved. Yet careful investigation revealed what lay beneath the surface: human adversaries leveraging social engineering, insider complicity, or sophisticated persistence methods that no automated logic would have detected.

The promise of AI resolution can feel comforting, but it’s also dangerously incomplete. Automation alone won’t address the adversaries who carefully evade detection. In truth, the hardest — and most critical — work in cybersecurity begins exactly where automation ends.

Through the Eyes of an Incident Responder

Early in my career, I investigated a phishing case that initially appeared straightforward: an account compromised through a single malicious email. Automated detection had flagged the threat and appeared to contain it. Case closed, right?

But something felt off. Digging deeper, we noticed patterns that automation had missed. The victim hadn’t been targeted once at random; they had been approached repeatedly over months, even years, sometimes in person, by someone posing as a friendly face, whom we later identified as an advanced threat actor. Connecting these dots took time, creativity, and intuition. Automation had no rule for spotting a friendly handshake or a casual conversation that masked hostile intent.

The investigation quickly grew complex. Only through careful manual analysis (examining past events, correlating subtle signals, and piecing together human context) did we understand the scope and nature of the attack. The victim had unknowingly become the focal point of a sophisticated campaign, one that could have quietly persisted indefinitely without a human analyst’s intervention.

This experience reaffirmed for me that, while automation handles routine detection well, true security investigations often begin precisely where automation stops.

Automate the Mundane, Elevate the Analyst

Over the years, I’ve helped SOC teams around the world operationalize complex security products — from EDR and Threat Intelligence Platforms to DLP and XDR solutions. I’ve seen firsthand how automation can radically improve efficiency by handling repetitive tasks: routine enrichment, alert triage, basic response actions, and even simple user validations. These capabilities are incredibly valuable, and embracing them should be standard practice.

However, the prevailing mindset — “let’s automate away Tier-1” — is dangerously simplistic. Automation shouldn’t just clear tickets; it should elevate analysts. We need to shift from seeing automation as purely a reduction tool to viewing it as a force multiplier: something that makes junior analysts sharper and senior analysts faster.

Rather than eliminating entry-level work, automation should help new analysts rapidly acquire investigative skills. Imagine a new graduate analyst receiving immediate context alongside their first alert: not just raw data, but knowledge — domain insights, organizational history, and relevant external intelligence. Now, even an entry-level analyst can operate at a higher baseline, engaging in tasks typically reserved for their more experienced peers.

This approach transforms automation from a blunt instrument into a strategic tool, empowering analysts at every stage of their careers to do more impactful, insightful, and meaningful security work.

What AI Can’t (Yet) Learn: The Investigative Mindset

In the fast-moving security landscape, the most sophisticated threats aren’t noisy — they’re subtle and often unprecedented. Consider recent supply-chain attacks or the nuanced social engineering campaigns tied to geopolitical tensions. These threats rarely trigger traditional alarms because they rely on trust, human error, or vulnerabilities we haven’t yet recognized. Detecting them requires a flexible investigative mindset: the ability to form, challenge, and adjust hypotheses rapidly.

An effective investigator thrives precisely because they’re comfortable with ambiguity. They combine technical proficiency with intuition, creatively connecting disparate data points: subtle registry modifications, obscure persistence methods, or new forensic artifacts from evolving cloud infrastructure. AI doesn’t yet possess these skills, largely because they must evolve in real time alongside the threats themselves.

Until AI can truly understand human intent, context, and creativity, the investigative mindset will remain uniquely — and crucially — human.

The Quiet Cost of Over-Automation

While automation undeniably boosts efficiency, over-reliance on it can quietly undermine security operations teams. Analysts may become passive observers, trusting AI to resolve threats without scrutiny. The rise of phishing campaigns driven by generative AI illustrates the danger: automated defenses may catch generic malicious content, but nuanced, personalized lures crafted by language models can slip past them precisely because they’re designed to evade known rulesets.

This scenario highlights a broader risk: by relying too heavily on automation, we erode analysts’ abilities to think critically, question findings, and uncover hidden threats. Over time, junior analysts — those most in need of developing investigative instincts — lose critical learning opportunities. This trend leaves organizations dangerously exposed when automation inevitably encounters novel threats or sophisticated adversaries.

A Better Vision: Tools as Investigative Companions

We need a different approach. Rather than relying solely on automation as a blunt tool, security teams must begin treating AI systems as investigative companions — partners that augment human intelligence rather than replace it.

Imagine AI systems surfacing insights rather than merely alerts. Recent vulnerabilities in widely used enterprise software, such as MOVEit Transfer or Confluence, demonstrate how crucial this capability could be. Rather than simply notifying analysts of vulnerabilities or exploits, what if AI connected dots proactively? A blog post about a recent CVE could be correlated automatically with subtle anomalies on a corporate network — perhaps benign alone, but suspicious when considered together. Analysts would receive contextual insights, not isolated alerts, immediately amplifying their investigative capabilities.

This is the future: transparent, explainable, collaborative tools that guide analysts toward deeper insights, equipping them with relevant knowledge at the exact moment it’s needed most.

As organizations embrace AI-powered security tools, the objective must evolve from simply automating workflows to defending with knowledge — knowledge of your environment, your organizational context, and the broader threat landscape.

Recent incidents, such as threat actors exploiting zero-day vulnerabilities in Barracuda Email Security Gateways or in common open-source libraries, highlight why this matters. In these scenarios, effective defense requires more than detection; it demands interpretation. Analysts must be able to correlate internal signals (anomalous behavior, device activity, privilege changes) with external intelligence (new CVEs, threat actor TTPs, geopolitical developments).

This is where many organizations hit a ceiling. Automation can reduce noise, but it rarely explains why something matters — or what to do next.

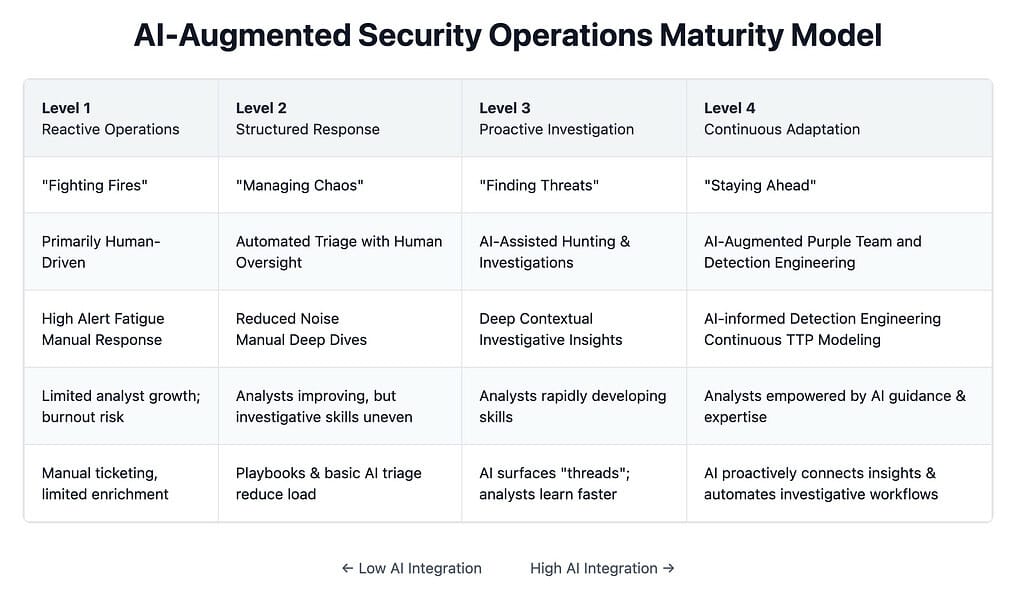

That’s why earlier-stage investment in AI-assisted investigation is so critical. As illustrated in our Security Operations Maturity Model, the biggest leap in security maturity doesn’t come from replacing humans — it comes from equipping analysts with context-rich insights. AI should not obscure investigation, but enhance it — surfacing threads to pull, highlighting possible connections, and shortening the distance between data and decision.

By empowering even early-career analysts with this level of support, organizations accelerate skill development, reduce investigation fatigue, and strengthen their resilience in the face of advanced threats.

The Human Analyst Is Not Optional

Despite rapid advances, AI still has critical limitations, primarily because cybersecurity is an inherently adversarial domain. Unlike the stable environments where automation thrives, cybersecurity faces adversaries who adapt daily. Tomorrow’s threats might arrive as generative-AI phishing attacks, deepfake impersonations, or entirely new classes of digital compromise we haven’t imagined yet.

Automation alone can’t anticipate or respond effectively to threats that defy existing detection rules or logic. Human analysts — equipped with creativity, intuition, and critical reasoning — will always remain essential, especially in moments of crisis or uncertainty. They are uniquely capable of adapting quickly, asking deeper questions, and uncovering truths hidden beneath layers of complexity.

Let’s invest in technology that elevates these analysts, enriches their knowledge, and expands their capabilities — not solutions that promise to replace them. The future of security is not fewer humans; it’s smarter humans, supported by intelligent systems that genuinely defend with knowledge.